Author

Arjan Neef

Testing, research and development

Website protection

To familiarize myself with ethical hacking I decided to fiddle with a few hack-the-box and capture the flag examples. The goal is to see how security can be breached and what steps to take to prevent it. With capture the flag challenges you basically download a virtual environment that you can try to breach without doing any harm. After a few challenges I started to see a standard approach that carries over to the real world too. The steps are the same, but the outcome will vary.

Gathering information

Capturing a flag (or hacking) always starts by collecting information about the target website or machine. I was a bit surprised to see how easy it is to collect basic and useful information about the target machine. It’s actually just like breaking in. First you try to find the doors, then you look at the locks on each door and check how old they are. For a website this is just the same. First you look at the website to find ways to gain access (how many doors), what software is used (what lock is used) and what version this software has (how old the lock is). Once you’ve gathered this information you write down how you collected it so you can automatically retrace your steps later on.

I collected some information about shops in my neighborhood and discovered that most of the shops use WordPress with the default login page and mostly outdated software. This is no problem yet, but having this information could be valuable one day, depending on the exploits that become available in the future. It also tells me how well the website is maintained. The more doors that can be found and the older the software, the more likely it is that this website will be hacked in the future. I can rank the shops in my neighborhood based on website security, which feels bad but that’s just how it is. This ranking is actually not hacking at all, it’s just gathering information. Just like a burglar who checks out the houses he wants to rob, you investigate first, before the hit.

With computers, however, this investigation is done automatically and really fast. A single computer can scan many websites in a matter of seconds. Just let it run for a while and you’ll get a nice list of websites ranked by security. Guess which websites will be targeted first? Now what to do with this information?

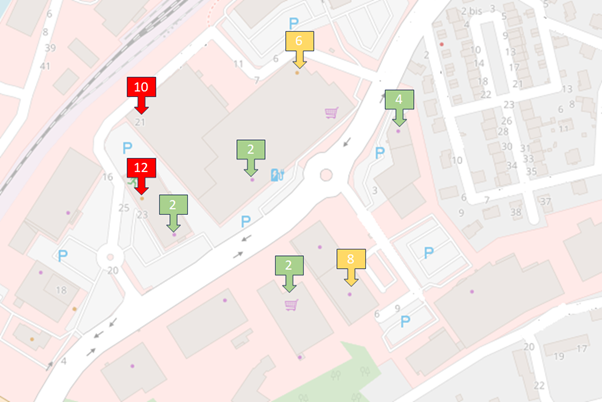

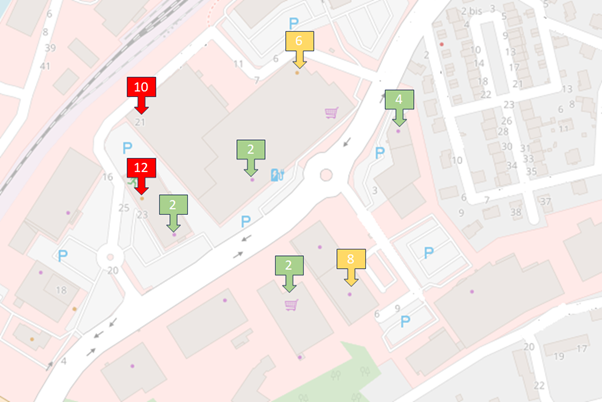

Take a look at this map. It shows a randomly selected area containing shops and factories. I won’t disclose the origin of this location as I do not intend to do any harm. I’ve marked the locations with a score. The higher the score, the more likely it is that a hacker will be interested and try to gain access. This doesn’t mean the site is hacked, it just classifies the website of this location so it can be compared to the others. The scoring works like this:

- Each irregular door that can be found will score 1 point

- Every identified application behind a door will score 1 point

- Every known vulnerability of a found application will score 1 point

- Every outdated application found will score 1 point

- Every hidden login page that can easily be found will score 1 point

As you can see, I haven’t weighted the threats themselves. Some vulnerabilities are more dangerous than others, so I only count those that actually pose a threat. Scoring 0 points is impossible in this test, and a high score doesn’t mean that there is a problem. It’s just used for the ranking. You might be wondering why an outdated application scores a point too, even though being outdated doesn’t mean it’s vulnerable. That’s true, but it tells you something about the awareness or availability of the administrator. This is also a weakness.
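The scoring rules above can be written down as a small sketch. The class name `SiteFindings`, its field names and the example sites are made up for illustration. The counts are assumed to come from an earlier information-gathering step, and the vulnerability count is assumed to include only the ones that actually pose a threat.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteFindings:
    """Counts collected for one site (hypothetical structure)."""
    name: str
    irregular_doors: int = 0        # unexpected entry points that were found
    identified_apps: int = 0        # applications identified behind a door
    known_vulnerabilities: int = 0  # only those that actually pose a threat
    outdated_apps: int = 0          # applications running an old version
    exposed_login_pages: int = 0    # "hidden" login pages that are easy to find


def score(site: SiteFindings) -> int:
    """Every finding counts for exactly 1 point; nothing is weighted."""
    return (
        site.irregular_doors
        + site.identified_apps
        + site.known_vulnerabilities
        + site.outdated_apps
        + site.exposed_login_pages
    )


def rank(sites: list[SiteFindings]) -> list[SiteFindings]:
    """Highest score first: the sites most likely to attract attention."""
    return sorted(sites, key=score, reverse=True)


if __name__ == "__main__":
    sites = [
        SiteFindings("shop-a", 1, 2, 0, 1, 1),
        SiteFindings("shop-b", 3, 3, 2, 2, 1),
    ]
    for site in rank(sites):
        print(site.name, score(site))
```

Because nothing is weighted, the result is only useful for comparing sites with each other, not as an absolute measure of risk.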

Known vulnerabilities

Now that there is a lot of information available it’s time to see what known flaws there are. The good (and bad) thing is that this information is available on many different websites. If a new flaw is reported, system administrators need to check whether their machines are affected. Hackers will use the same information to check whether any of the machines they have spotted contain these flaws. Who will be first? Is there nothing you can do to avoid this race? Yes of course, but it requires some basic knowledge and skills.

What you could do (as a start) is to make it harder for hackers to gather the information in the first place. I call it hiding, changing or cloaking your information so that a hacker won’t find the information he is looking for. Although this will not really improve your security, it will certainly lower your chances of being discovered. If they cannot find or see a door, then how are they supposed to walk through it? They’ll probably use the next door instead. It is no guarantee though. Also make sure your website still works 100% after applying these changes, as some (badly written) applications don’t expect them.
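To check how much your own site gives away, you could look at the HTTP response headers it sends. The sketch below assumes you already have those headers as a dictionary. The function name `disclosing_headers`, the short list of watched headers and the example values are my own choices for illustration, not a complete list.

```python
import re

# Response headers that commonly announce software and version.
# This short list is an assumption; real sites may leak through others.
WATCHED_HEADERS = ("server", "x-powered-by", "x-aspnet-version", "x-generator")

# Something that looks like a version number, e.g. 2.4 or 7.4.3
VERSION_PATTERN = re.compile(r"\d+(?:\.\d+)+")


def disclosing_headers(headers: dict[str, str]) -> list[str]:
    """Return the names of watched headers whose value contains a version."""
    found = []
    for name, value in headers.items():
        if name.lower() in WATCHED_HEADERS and VERSION_PATTERN.search(value):
            found.append(name)
    return found


if __name__ == "__main__":
    example = {
        "Server": "Apache/2.4.41 (Ubuntu)",
        "X-Powered-By": "PHP/7.4.3",
        "Content-Type": "text/html; charset=utf-8",
    }
    print(disclosing_headers(example))
```

A header such as `Server: nginx` without a version number is not flagged here, which matches the idea of cloaking: the door is still there, but the age of the lock is no longer printed on it.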

What’s next?

Now that the basic information is collected, it’s time to analyze it and decide whether you should contact the owner of the site or go ahead and investigate further. Besides the known vulnerabilities, you’ve probably also discovered one or more locations that allow you to log into the website or server. Trying to gain access here will probably not go unnoticed. But not all administrators constantly monitor their machines, so you could try to see how far you get. The next step would then be brute-force login attempts based on lists of known default usernames and passwords, or a few well-guessed username/password combinations. If the website allows you to do this it is poorly protected, as brute-force login attempts should be blocked. In capture the flag challenges, brute-force login attempts and directory browsing are accepted approaches as there is no harm to be done. This won’t help you on real websites though. Well, it shouldn’t, but on some it still does.
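Blocking brute-force login attempts does not have to be complicated. Below is a minimal sketch of one possible approach: count failed logins per client within a time window and refuse further attempts once a limit is reached. The class name `LoginThrottle`, the default limits and the use of an IP address as key are assumptions for this example, not a description of how any particular product does it.

```python
from collections import defaultdict, deque


class LoginThrottle:
    """Block a client after too many failed logins within a time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        # key (for example an IP address) -> timestamps of recent failures
        self._failures: dict[str, deque] = defaultdict(deque)

    def _recent(self, key: str, now: float) -> deque:
        """Drop failures that fall outside the window and return the rest."""
        failures = self._failures[key]
        while failures and now - failures[0] > self.window_seconds:
            failures.popleft()
        return failures

    def record_failure(self, key: str, now: float) -> None:
        self._recent(key, now).append(now)

    def is_blocked(self, key: str, now: float) -> bool:
        return len(self._recent(key, now)) >= self.max_failures


if __name__ == "__main__":
    throttle = LoginThrottle(max_failures=3, window_seconds=60)
    for second in (0, 1, 2):
        throttle.record_failure("203.0.113.7", now=second)
    print(throttle.is_blocked("203.0.113.7", now=3))    # inside the window
    print(throttle.is_blocked("203.0.113.7", now=100))  # window has passed
```

The time is passed in as `now` so the behaviour is easy to test. A real login handler would pass something like `time.monotonic()` and would also have to decide what to do with clients that come back after the window has passed.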

Some recurring vulnerabilities are:

- Using malicious user input to trigger something internal (SQL injection)

- Package update faults (adding new vulnerabilities with a new version)

- Update of leaked usernames and password files (password leaks)

- Post link/data on the website linking or redirecting to malicious website(s)

- Sending phishing emails containing a fabricated login page to grab credentials
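To make the first item in this list concrete, here is a small sketch of SQL injection and its usual fix, using Python's built-in `sqlite3` module and an in-memory database. The table, the user names and the function names are invented for the example. The unsafe function pastes user input straight into the SQL text, while the safe one passes it as a parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: the input becomes part of the SQL statement itself.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the input is always treated as data, never as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the condition is always true
    print(find_user_safe(conn, payload))    # no user has this literal name
```

With the unsafe version the input rewrites the `WHERE` clause so that it matches every row. With the parameterized version the same input is just a strange user name that nobody has.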

Keeping an eye out

Now that the target machine has been identified and the most important knowledge has been collected, it’s time to move on. But before you do that, write down all important information. You’ll be waiting for an exploit to be reported, and once the exploit is there you’ll immediately know which machines it will work on. This is what real hackers do: collect information and wait. Well, not actually wait, but move on to the next target. Occasionally they revisit the sites to see if anything has changed and update the information. You’ll have hundreds if not thousands of machines tracked down in no time. Once an exploit is there, it’s time to visit these sites again and use the vulnerability to gain access or do other nasty things.
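The bookkeeping described here works just as well for an administrator who wants to know which of his own machines are affected when a new flaw is reported. The sketch below assumes the collected information is stored as simple records and that versions are plain dotted numbers. The names `Record`, `parse_version` and `affected`, the software name `examplecms` and all sites are hypothetical.

```python
from typing import NamedTuple


class Record(NamedTuple):
    """One line of collected information about a site (hypothetical)."""
    site: str
    software: str
    version: str


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '5.8.1' into (5, 8, 1). Assumes purely numeric, dotted versions."""
    return tuple(int(part) for part in text.split("."))


def affected(records: list[Record], software: str, fixed_in: str) -> list[str]:
    """Sites running the given software in a version older than the fix."""
    limit = parse_version(fixed_in)
    return [
        record.site
        for record in records
        if record.software == software and parse_version(record.version) < limit
    ]


if __name__ == "__main__":
    records = [
        Record("site-a.example", "examplecms", "5.8.1"),
        Record("site-b.example", "examplecms", "6.2"),
        Record("site-c.example", "otherapp", "1.0"),
    ]
    print(affected(records, "examplecms", fixed_in="6.0"))
```

This is why writing everything down matters: once the list exists, finding the affected machines takes a single lookup, for whoever holds the list.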

Real hacking

The above steps are mainly used to identify possible weak targets and are run automatically by scripts. But once a hacker looks at the results of his automated scans, he will start with the weakest websites. Now the hacking changes from automatically scanning a website to manually peeking and poking around. If there is a weak spot, you’d better hope it won’t be found.

Want to know how well you’ll score from automated scans? I can run a scan and inform you about the weak points. Peeking and poking around is the fun part, but I won’t do that until approved of course.